This blog is a repost from https://neonmirrors.net/ written by Chip Zoller

Unless you’ve been living under a rock, you’re probably aware that Sigstore has been making waves in the software supply chain space, and that’s a great thing because we definitely need more in this area. Its Cosign tool helps put many supply chain security practices, such as image signing, into effect. Harbor, one of the most popular open source registries out there, recently added support for Cosign in version 2.5. It’s great to see such momentum around tools like these, so in this blog I’m going to play around with this combination and show how the two can work together with Kyverno for robust software supply chain security.

With Cosign, we sign an image and make the signature available to anyone with pull access so it can be verified. With Harbor, we can prevent images from being pulled unless they have some signature (and allow replication with signatures), and with Kyverno we can ensure, at runtime, that it is the signature we want. It’s a great combination, so let’s see this in action.

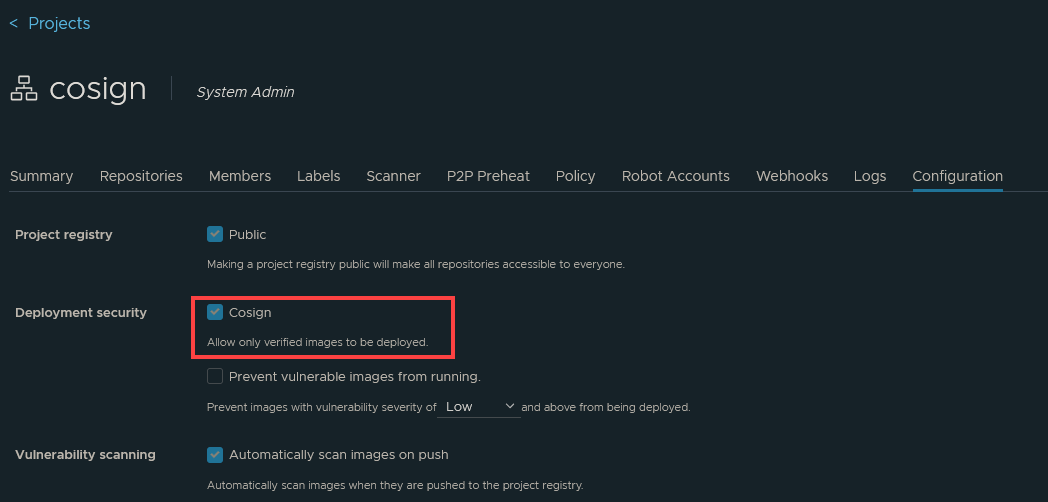

First thing you’ll see when you either install or upgrade (like I did) to Harbor 2.5 is a new Cosign section on a Project.

With this option checked, Harbor will prevent any unsigned images from being pulled from any repository under this Project. However, it will not:

- Prevent images from being pushed or stored without signatures

- Actually sign images

If an unsigned image sits in this project and you try to pull it with your container runtime engine, you will probably see a message like this:

```
Error response from daemon: unknown: The image is not signed in Cosign.
```

Likewise, running this image in a Pod in Kubernetes will also fail, but with a slightly less helpful message citing “412 Precondition Failed”:

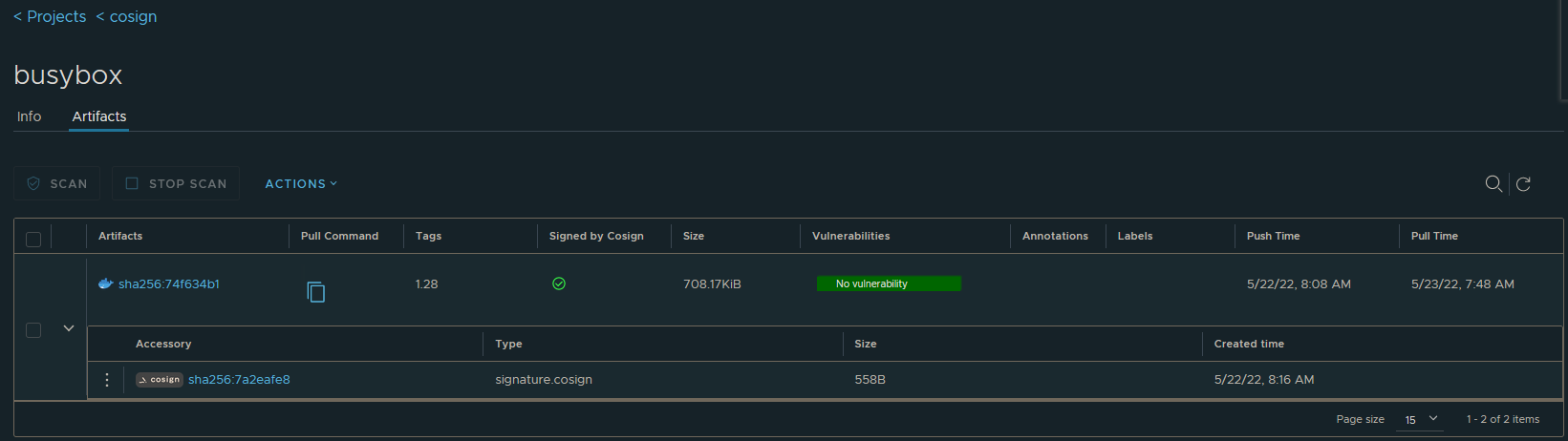

```
Failed to pull image "harbor2.zoller.com/cosign/tools:5.2.1": rpc error: code = Unknown desc = failed to pull and unpack image "harbor2.zoller.com/cosign/tools:5.2.1": failed to resolve reference "harbor2.zoller.com/cosign/tools:5.2.1": pulling from host harbor2.zoller.com failed with status code [manifests 5.2.1]: 412 Precondition Failed
```

That said, once you sign your image with Cosign, the UI will reflect it being signed and also show the signature item.

Having images with signatures is a great step, but the next step is to ensure that the image has the correct signature. The criteria you use could range from simple to complex. There could be just one organization key used to sign everything. Or specific image repositories might have specific keys. Or maybe you have images that are signed by multiple parties. Whatever the case, this is where layering in Kyverno helps to enforce runtime security based upon policy, which you can define in a highly flexible manner. And because, as is probably well known at this point, Kyverno doesn’t need a programming language, this is quite easy and straightforward.
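To make the division of labor concrete, here is a toy Python model of the two checks. It is only an illustration: the `Image` class, the key labels, the `registry.example.com` references, and both functions are hypothetical names invented for this sketch, and neither Harbor nor Kyverno exposes an API like this. Harbor’s project option is modeled as “has at least one signature” and the policy check as “has a signature from a key we trust”.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Image:
    """Hypothetical stand-in for an image and the keys that signed it."""
    ref: str
    signed_by: frozenset = field(default_factory=frozenset)


def registry_allows_pull(image: Image) -> bool:
    # Models the Harbor project option: any signature at all is enough.
    return len(image.signed_by) > 0


def policy_admits(image: Image, trusted_keys: set) -> bool:
    # Models the policy check: at least one signature must come from a trusted key.
    return bool(image.signed_by & trusted_keys)


trusted = {"org-key"}  # assumed label for the key the policy trusts
unsigned = Image("registry.example.com/app/a:1")
other_key = Image("registry.example.com/app/b:1", frozenset({"someone-else"}))
expected = Image("registry.example.com/app/c:1", frozenset({"org-key"}))

for img in (unsigned, other_key, expected):
    print(img.ref, registry_allows_pull(img), policy_admits(img, trusted))
```

The middle case is the interesting one: the registry lets the pull through because a signature exists, while the policy rejects it because the signer is not the one we expect.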

Let’s put Kyverno in the picture now using a very simple image verification policy.

I’ll create the following policy with one rule as shown below. This will use my public key to verify that any image coming from my internal registry and the cosign project has been signed with the key I expect.

```yaml
apiVersion: kyverno.io/v1
kind: ClusterPolicy
metadata:
  name: verify-images
spec:
  validationFailureAction: enforce
  rules:
    - name: check-image-sig
      match:
        any:
          - resources:
              kinds:
                - Pod
      verifyImages:
        - image: "harbor2.zoller.com/cosign/*"
          key: |-
            -----BEGIN PUBLIC KEY-----
            MFkwEwYHKoZIzj0CAQYIKoZIzj0DAQcDQgAEpNlOGZ323zMlhs4bcKSpAKQvbcWi
            5ZLRmijm6SqXDy0Fp0z0Eal+BekFnLzs8rUXUaXlhZ3hNudlgFJH+nFNMw==
            -----END PUBLIC KEY-----
```

If I now try to pull and run another image that is in the cosign project and is signed, but not by a private key corresponding to the public key presented in the policy, Kyverno will see this and block the attempt. Below, I’ve signed an alpine image with a different key, and this is the result I get when trying to run it.

Note: If your Harbor installation uses a certificate issued by a well-known public certificate authority, you’ll probably be fine. If it uses a self-signed certificate or one issued by an internal root CA like mine, you’ll want to check out the appendix at the end of this article.

```
$ k run alpine --image harbor2.zoller.com/cosign/alpine:latest -- sleep 9999
Error from server: admission webhook "mutate.kyverno.svc-fail" denied the request:

resource Pod/default/alpine was blocked due to the following policies

verify-images:
  check-image-sig: |-
    failed to verify signature for harbor2.zoller.com/cosign/alpine:latest: .attestors[0].entries[0].keys: no matching signatures:
    failed to verify signature
```

As you can see, Kyverno returned no matching signatures for this image even though Harbor would allow it to be pulled.

Let’s now try with the busybox image I signed with a private key matching the public key found in the policy.

```
$ k run busybox --image harbor2.zoller.com/cosign/busybox:1.28 -- sleep 999
pod/busybox created
```

Harbor allowed the image to be pulled, but Kyverno checked the image beforehand to ensure it had a matching signature. It did, and so it was successfully pulled and run as a Pod.
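Both test images live under `harbor2.zoller.com/cosign/`, which is what the policy’s `image` pattern targets; images outside that pattern are not checked by this rule at all. Here is a rough Python sketch of that scoping. It approximates the wildcard with the standard library’s `fnmatch`, which is an assumption made purely for illustration: Kyverno’s own matcher is written in Go and may behave differently in edge cases, such as image references without an explicit registry. The `docker.io/...` reference is a made-up example.

```python
from fnmatch import fnmatchcase

PATTERN = "harbor2.zoller.com/cosign/*"  # taken from the policy's `image` field


def rule_applies(image_ref: str, pattern: str = PATTERN) -> bool:
    """Return True if the image reference falls under the wildcard pattern."""
    # fnmatchcase is case-sensitive and lets `*` span `/` and `:` as well.
    return fnmatchcase(image_ref, pattern)


for ref in (
    "harbor2.zoller.com/cosign/alpine:latest",
    "harbor2.zoller.com/cosign/busybox:1.28",
    "docker.io/library/nginx:1.25",  # hypothetical image outside the project
):
    print(ref, rule_applies(ref))
```

If you want signatures enforced for more than one project or registry, the pattern (or additional entries under `verifyImages`) is where that scope gets widened.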

Pretty short and straightforward article, but it does show how tools like Harbor, Cosign, and Kyverno can all come together to help take the first few steps in the right direction of maintaining better software supply chain security in your Kubernetes clusters.

Appendix: Trusting Custom Root CAs

During the course of playing around with this combination, I discovered that some additional work was required in order to establish trust between Kyverno and my lab Harbor instance. Long story short, Kyverno doesn’t necessarily use the same trust as your Kubernetes nodes do and so you may need to give Kyverno the same certificate(s) as your nodes to establish trust. We’re hoping to make this easier in the future, but for now, these are the steps you’ll want to follow.

First, you’ll need to get your ca-certificates bundle in good shape by adding your internal root and regenerating the bundle. Most Linux distributions follow a similar process; on Ubuntu, this means copying the root certificate (with a .crt extension) into /usr/local/share/ca-certificates/ and running update-ca-certificates. With your combined ca-certificates in hand, the next step is to create a ConfigMap in your Kubernetes cluster which stores this as a multi-line value. The manifest you build should end up looking something like this below.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: kyverno-certs
  namespace: kyverno
data:
  ca-certificates: |
    -----BEGIN CERTIFICATE-----
    MIIH0zCCBbugAwIBAgIIXsO3pkN/pOAwDQYJKoZIhvcNAQEFBQAwQjESMBAGA1UE
    AwwJQUNDVlJBSVoxMRAwDgYDVQQLDAdQS0lBQ0NWMQ0wCwYDVQQKDARBQ0NWMQsw
    CQYDVQQGEwJFUzAeFw0xMTA1MDUwOTM3MzdaFw0zMDEyMzEwOTM3MzdaMEIxEjAQ
    BgNVBAMMCUFDQ1ZSQUlaMTEQMA4GA1UECwwHUEtJQUNDVjENMAsGA1UECgwEQUND
    <snip>
    -----BEGIN CERTIFICATE-----
    MIIBbzCCARWgAwIBAgIQK0Z1j0Q96/LIo4tNHxsPUDAKBggqhkjOPQQDAjAWMRQw
    EgYDVQQDEwtab2xsZXJMYWJDQTAeFw0yMjA1MTgwODI2NTBaFw0zMjA1MTUwODI2
    NTBaMBYxFDASBgNVBAMTC1pvbGxlckxhYkNBMFkwEwYHKoZIzj0CAQYIKoZIzj0D
    AQcDQgAEJxGhyW26O77E7fqFcbzljYzlLq/G7yANNwerWnWUKlW9gcrcPqZwwrTX
    yaJZpdCWTObvbOyaOxq5NsytC/ubLKNFMEMwDgYDVR0PAQH/BAQDAgEGMBIGA1Ud
    EwEB/wQIMAYBAf8CAQEwHQYDVR0OBBYEFDoT1GEM8NYfxSKBkSzg4rpY+xdUMAoG
    CCqGSM49BAMCA0gAMEUCIQDDLWFn/XJPqpNGXcyjlSJFxlQUJ5Cu/+nDvtbTeUGA
    NAIgMsVwBafMtmLQFlfvZsE95UYoYUV4ayH+OLTTQaDQOPY=
    -----END CERTIFICATE-----
```

There at the end you can see my root CA has been appended to the bundle.
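Hand-indenting a full certificate bundle is tedious, so here is a minimal Python sketch of one way to generate a manifest of this shape from the bundle text. The function name `render_configmap` and the placeholder certificate body are assumptions made for illustration; the ConfigMap name, namespace, and data key default to the values used in this article. It builds the YAML with plain string handling rather than a YAML library, which is fine for PEM text but is not a general-purpose serializer.

```python
import textwrap


def render_configmap(bundle_pem: str, name: str = "kyverno-certs",
                     namespace: str = "kyverno",
                     key: str = "ca-certificates") -> str:
    """Render a ConfigMap manifest holding the bundle as a YAML block scalar."""
    header = (
        "apiVersion: v1\n"
        "kind: ConfigMap\n"
        "metadata:\n"
        f"  name: {name}\n"
        f"  namespace: {namespace}\n"
        "data:\n"
        f"  {key}: |\n"
    )
    # Every line of the block scalar must be indented deeper than its key.
    body = textwrap.indent(bundle_pem.strip("\n"), "    ")
    return header + body + "\n"


# Placeholder content standing in for a real bundle (not a valid certificate).
demo_bundle = (
    "-----BEGIN CERTIFICATE-----\n"
    "PLACEHOLDER\n"
    "-----END CERTIFICATE-----\n"
)
print(render_configmap(demo_bundle))
```

In practice you would read the regenerated bundle from disk, pass its contents to the function, and apply the printed manifest to the cluster.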

Second, once you’ve created the ConfigMap in your cluster, you’ll want to modify your Kyverno Deployment so it mounts this ConfigMap and overwrites the internal bundle. Use the below snippet as a guide on how to do that.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kyverno
  namespace: kyverno
spec:
  template:
    spec:
      containers:
        - args:
            - --autogenInternals=true
            - -v=4
          image: ghcr.io/kyverno/kyverno:v1.7.0-rc1
          name: kyverno
          volumeMounts:
            - mountPath: /.sigstore
              name: sigstore
            - name: ca-certificates
              mountPath: /etc/ssl/certs/ca-certificates.crt
              subPath: ca-certificates.crt
      <snip>
      volumes:
        - name: sigstore
        - name: ca-certificates
          configMap:
            name: kyverno-certs
            items:
              - key: ca-certificates
                path: ca-certificates.crt
```

Obviously this is a highly abridged manifest, but it shows how you’ll need to add a volume which mounts the ConfigMap and how to present that as the ca-certificates.crt file which will overwrite the one supplied by default in the Kyverno container.

Now you should be able to work with your registry in a fully secured way. One last thing to note is that you may need to make some adjustments in your cluster DNS so that internal DNS records are resolved before CoreDNS forwards queries to external resolvers. If this is the case, you’ll want to update the Corefile definition in the CoreDNS ConfigMap appropriately. For example, in mine I had to add a section to keep those queries local.

```
zoller.com:53 {
    errors
    cache 30
    forward . 192.168.1.5
}
```

And that’s about it. Hope you find this helpful.

Thanks, Chip!

Keep visiting Nirmata for more info and news regarding software supply chain security, a matter of significant importance to DevOps teams everywhere. We’ve built Kyverno to make it all easier.
